MinIO: Basic Installation and Configuration

Start an Ubuntu/Debian LXC container, then download and install the MinIO server package:

wget https://dl.min.io/server/minio/release/linux-amd64/minio_20220907222502.0.0_amd64.deb

dpkg -i minio_20220907222502.0.0_amd64.deb

Create a service user:

sudo useradd -r minio-user -s /sbin/nologin

sudo chown minio-user:minio-user /usr/local/bin/minio

sudo mkdir /etc/minio

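sudo chown minio-user:minio-user /etc/minio

The data directory (/s3, set as MINIO_VOLUMES below) must also exist and be writable by minio-user:

sudo mkdir -p /s3

sudo chown minio-user:minio-user /s3

Create a systemd unit so MinIO starts at boot: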

nano /etc/systemd/system/minio.service

[Unit]

Description=MinIO

Documentation=https://docs.min.io

Wants=network-online.target

After=network-online.target

AssertFileIsExecutable=/usr/local/bin/minio

[Service]

WorkingDirectory=/usr/local

User=minio-user

Group=minio-user

ProtectProc=invisible

EnvironmentFile=-/etc/default/minio

ExecStartPre=/bin/bash -c "if [ -z \"${MINIO_VOLUMES}\" ]; then echo \"Variable MINIO_VOLUMES not set in /etc/default/minio\"; exit 1; fi"

ExecStart=/usr/local/bin/minio server $MINIO_OPTS $MINIO_VOLUMES

# Let systemd restart this service always

Restart=always

# Specifies the maximum file descriptor number that can be opened by this process

LimitNOFILE=1048576

# Specifies the maximum number of threads this process can create

TasksMax=infinity

# Disable timeout logic and wait until process is stopped

TimeoutStopSec=infinity

SendSIGKILL=no

[Install]

WantedBy=multi-user.target

# Built for ${project.name}-${project.version} (${project.name})

Environment settings:

nano /etc/default/minio

MINIO_ROOT_USER="user"

MINIO_VOLUMES="/s3"

MINIO_OPTS="-C /etc/minio --address 0.0.0.0:9000 --console-address 0.0.0.0:9001"

MINIO_ROOT_PASSWORD="password"

MINIO_PROMETHEUS_AUTH_TYPE="public"

MINIO_PROMETHEUS_URL="http://ip:9090"

Reload systemd, then start the service and enable it at boot:

systemctl daemon-reload

service minio start

systemctl enable minio

Nginx Proxy:

server {

listen 80;

server_name s3.YOURDOMAIN.com;

location / {

return 301 https://s3.YOURDOMAIN.com$request_uri;

}

}

server {

listen 443 ssl;

server_name s3.YOURDOMAIN.com;

ssl_certificate /etc/letsencrypt/live/s3.YOURDOMAIN.com/fullchain.pem; # managed by Certbot

ssl_certificate_key /etc/letsencrypt/live/s3.YOURDOMAIN.com/privkey.pem; # managed by Certbot

ssl_protocols TLSv1.2 TLSv1.3;

ssl_prefer_server_ciphers on;

ssl_ciphers 'ECDHE-ECDSA-AES256-GCM-SHA384:ECDHE-RSA-AES256-GCM-SHA384:ECDHE-ECDSA-CHACHA20-POLY1305:ECDHE-RSA-CHACHA20-POLY1305:ECDHE-ECDSA-AES128-GCM-SHA256:ECDHE-RSA-AES128-GCM-SHA256:ECDHE-ECDSA-AES256-SHA384:ECDHE-RSA-AES256-SHA384:ECDHE-ECDSA-AES128-SHA256:ECDHE-RSA-AES128-SHA256:DHE-RSA-AES128-GCM-SHA256:DHE-DSS-AES128-GCM-SHA256:ECDHE-RSA-AES128-SHA:DHE-RSA-AES128-SHA256:DHE-RSA-AES128-SHA';

add_header Strict-Transport-Security "max-age=31536000; includeSubdomains;";

server_tokens off;

add_header X-Frame-Options SAMEORIGIN;

add_header X-Content-Type-Options nosniff;

add_header X-Robots-Tag none;

client_max_body_size 10000M;

keepalive_timeout 1800;

location /bucket1/ {

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_set_header X-Forwarded-Proto $scheme;

proxy_set_header Host $http_host;

proxy_connect_timeout 300;

# Default is HTTP/1, keepalive is only enabled in HTTP/1.1

proxy_http_version 1.1;

proxy_set_header Connection "" ;

chunked_transfer_encoding off;

proxy_pass http://ip:9000;

}

location /bucket2/ {

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_set_header X-Forwarded-Proto $scheme;

proxy_set_header Host $http_host;

proxy_connect_timeout 300;

# Default is HTTP/1, keepalive is only enabled in HTTP/1.1

proxy_http_version 1.1;

proxy_set_header Connection "";

chunked_transfer_encoding off;

proxy_pass http://ip:9000;

}

location /bucket3/ {

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_set_header X-Forwarded-Proto $scheme;

proxy_set_header Host $http_host;

proxy_connect_timeout 300;

# Default is HTTP/1, keepalive is only enabled in HTTP/1.1

proxy_http_version 1.1;

proxy_set_header Connection "";

chunked_transfer_encoding off;

proxy_pass http://ip:9000;

}

# Proxy any other request to the MinIO Console running on port 9001

location / {

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_set_header X-Forwarded-Proto $scheme;

proxy_set_header Host $http_host;

proxy_set_header Upgrade $http_upgrade;

proxy_set_header Connection "upgrade";

proxy_connect_timeout 300;

# Default is HTTP/1, keepalive is only enabled in HTTP/1.1

proxy_http_version 1.1;


chunked_transfer_encoding off;

proxy_pass http://ip:9001;

}

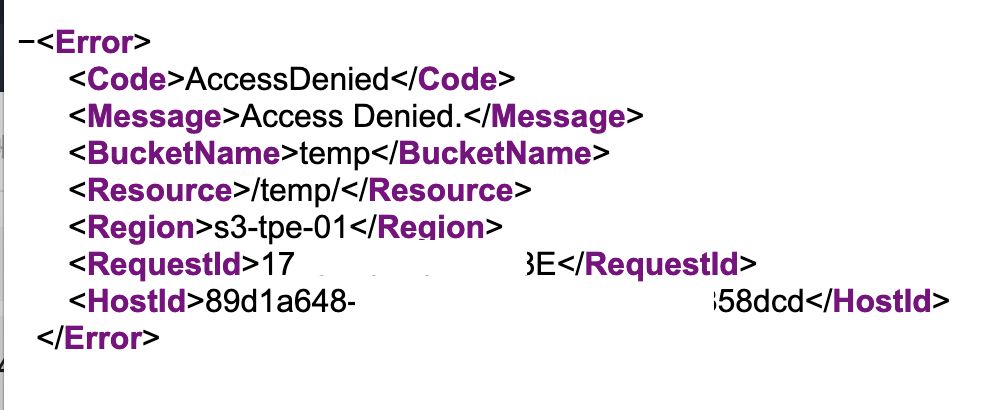

}公開 Buckets:

Make a bucket public while hiding its file listing.

First set the bucket's access policy to Public, then switch it to Custom and remove s3:ListBucket:

{

"Version": "2012-10-17",

"Statement": [

{

"Effect": "Allow",

"Principal": {

"AWS": [

"*"

]

},

"Action": [

"s3:ListBucketMultipartUploads",

"s3:GetBucketLocation"

],

"Resource": [

"arn:aws:s3:::temp"

]

},

{

"Effect": "Allow",

"Principal": {

"AWS": [

"*"

]

},

"Action": [

"s3:AbortMultipartUpload",

"s3:DeleteObject",

"s3:GetObject",

"s3:ListMultipartUploadParts",

"s3:PutObject"

],

"Resource": [

"arn:aws:s3:::temp/*"

]

}

]

}

Files inside the bucket can still be opened normally, but browsing the bucket's file listing is denied.
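The policy above still allows anonymous s3:PutObject and s3:DeleteObject on the bucket's objects, so anyone who knows an object key can overwrite or delete it. If public downloads are all that is needed, a narrower policy keeps only s3:GetBucketLocation on the bucket and s3:GetObject on its objects. The function below is a minimal sketch that prints such a policy for one bucket, building both ARNs from the same argument so they cannot name different buckets; the function name is hypothetical, and the output is meant to be pasted into the bucket's Custom access policy.

```shell
#!/bin/sh
# anonymous_readonly_policy BUCKET  (hypothetical helper)
# Prints a bucket policy that lets anonymous clients download objects by key
# (s3:GetObject) but leaves out s3:ListBucket, so the listing stays private.
# Both Resource entries are derived from the same BUCKET argument.
anonymous_readonly_policy() {
  local bucket="$1"
  cat <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": {"AWS": ["*"]},
      "Action": ["s3:GetBucketLocation"],
      "Resource": ["arn:aws:s3:::${bucket}"]
    },
    {
      "Effect": "Allow",
      "Principal": {"AWS": ["*"]},
      "Action": ["s3:GetObject"],
      "Resource": ["arn:aws:s3:::${bucket}/*"]
    }
  ]
}
EOF
}
```

For example, `anonymous_readonly_policy temp > temp-public.json`. Applying the file from the command line with `mcli anonymous set-json temp-public.json s3-tpe-01/temp` assumes a recent mcli release; older releases named this command `mcli policy set-json`.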

User IAM policies:

Read/write access to a specific bucket:

{

"Version": "2012-10-17",

"Statement": [

{

"Sid": "AllowRootLevelListingForUserify",

"Effect": "Allow",

"Action": [

"s3:ListBucket"

],

"Resource": [

"arn:aws:s3:::temp"

]

},

{

"Sid": "AllowUserToReadWriteForUserify",

"Effect": "Allow",

"Action": [

"s3:DeleteObject",

"s3:GetObject",

"s3:PutObject"

],

"Resource": [

"arn:aws:s3:::temp/*"

]

}

]

}

After creating the IAM policy, open the user under user management and assign the policy to them; that user can then access this bucket.
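The console steps above can also be scripted with the MinIO client. This is a minimal sketch, assuming the RW policy JSON above has been saved to a file, that mcli already has an alias for the server (as set up in the replication section), and that mcli is a recent release providing `admin policy create` and `admin policy attach` (older releases used `admin policy add` and `admin policy set ALIAS POLICY user=NAME` instead). The function, user, and policy names are hypothetical; setting MCLI=echo turns it into a dry run that only prints the commands.

```shell
#!/bin/sh
# grant_bucket_policy ALIAS USER SECRET POLICY_NAME POLICY_FILE  (hypothetical)
# Creates a MinIO user, registers an IAM policy from a JSON file and attaches
# the policy to that user. Override MCLI (e.g. MCLI=echo) for a dry run.
MCLI="${MCLI:-mcli}"

grant_bucket_policy() {
  local alias_name="$1" user="$2" secret="$3" policy="$4" policy_file="$5"
  # Stop at the first failing step so a half-configured user is obvious.
  "$MCLI" admin user add "$alias_name" "$user" "$secret" || return 1
  "$MCLI" admin policy create "$alias_name" "$policy" "$policy_file" || return 1
  "$MCLI" admin policy attach "$alias_name" "$policy" --user "$user"
}
```

For example, `grant_bucket_policy s3-tpe-01 app-user 'app-secret-123' temp-rw ./temp-rw.json`. Stopping at the first failure keeps the steps in order: the policy must exist before it can be attached.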

Read-only access:

{

"Version": "2012-10-17",

"Statement": [

{

"Effect": "Allow",

"Action": [

"s3:GetBucketLocation"

],

"Resource": [

"arn:aws:s3:::test",

"arn:aws:s3:::test2"

]

},

{

"Sid": "AllowRootLevelListingForUserify",

"Effect": "Allow",

"Action": [

"s3:ListBucket"

],

"Resource": [

"arn:aws:s3:::test",

"arn:aws:s3:::test2"

]

},

{

"Sid": "AllowUserToReadForUserify",

"Effect": "Allow",

"Action": [

"s3:GetObject"

],

"Resource": [

"arn:aws:s3:::test/*",

"arn:aws:s3:::test2/*"

]

}

]

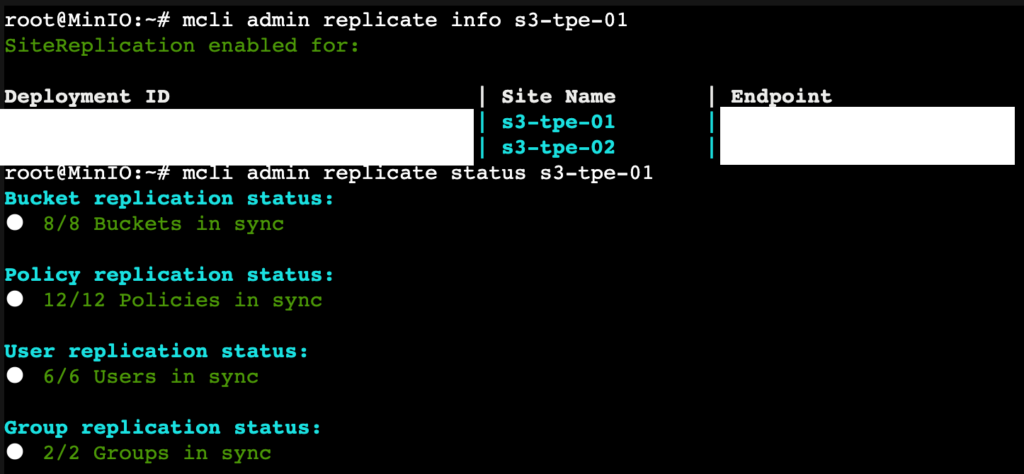

}新增鏡像複製:

Install the MinIO client (mcli) and set up an alias for each server:

mcli alias set s3-tpe-01 http://10.0.1.200:9000 user passwd

mcli alias set s3-tpe-02 http://10.0.1.230:9000 user passwd

Create the replication group:

mcli admin replicate add s3-tpe-01 s3-tpe-02

View replication details:

mcli admin replicate info s3-tpe-01

Check replication status:

mcli admin replicate status s3-tpe-01
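Beyond the status output, a quick end-to-end check is to create a throwaway bucket on the first site and wait for it to appear on the second, since site replication also propagates bucket creation. This is a minimal sketch using the aliases above; the function name and the probe bucket name are hypothetical, and MCLI can be overridden with a stub for a dry run.

```shell
#!/bin/sh
# check_site_replication SRC_ALIAS DST_ALIAS BUCKET [TRIES]  (hypothetical)
# Creates BUCKET on SRC_ALIAS, then polls DST_ALIAS once per second
# (default 10 tries) until `ls` on that bucket succeeds there.
MCLI="${MCLI:-mcli}"

check_site_replication() {
  local src="$1" dst="$2" bucket="$3" tries="${4:-10}" i=0
  "$MCLI" mb "${src}/${bucket}" || return 1
  while [ "$i" -lt "$tries" ]; do
    # `ls` fails while the bucket does not exist yet on the target site.
    if "$MCLI" ls "${dst}/${bucket}" >/dev/null 2>&1; then
      echo "bucket ${bucket} is visible on ${dst}"
      return 0
    fi
    sleep 1
    i=$((i + 1))
  done
  echo "bucket ${bucket} did not appear on ${dst}" >&2
  return 1
}
```

For example, `check_site_replication s3-tpe-01 s3-tpe-02 replication-probe`, then remove the probe with `mcli rb s3-tpe-01/replication-probe`.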
